Improving GDS support for teams with lower digital capability

Finding out the real problems

I joined the UK Government Digital Service (GDS) in August 2017 as a service designer. Lou Downe, Head of Design, asked me to find out what users experienced when interacting with GDS assurance, and what support they received.

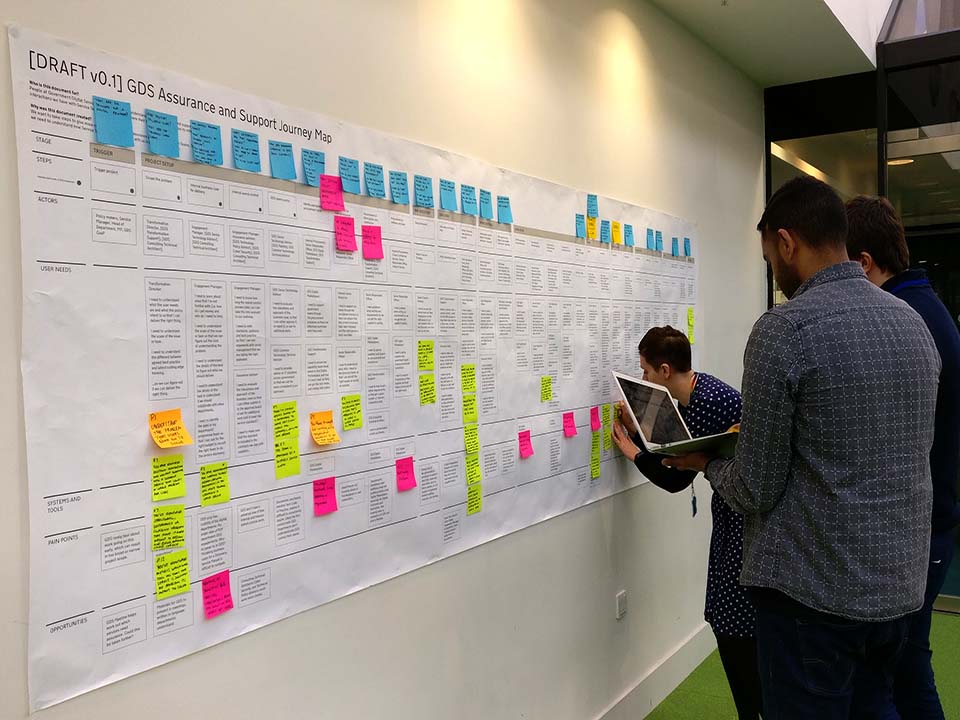

Without a dedicated team to run a Discovery, I needed help, so I brought people together from across GDS to map out the series of interactions users have with us.

There were three key findings:

- We were telling teams they weren't meeting the standards, but offering little help to teams that didn't understand what to do differently, which stopped them from progressing

- Our support was disjointed, meaning our users had to navigate our organisational structures to get what they needed

- We had little visibility of changes to services during the creation of policy, strategy and business cases, and as a result, our support often arrived too late

These findings came from pulling together existing user research, as well as getting front-line delivery staff to help create maps of our services. This was a good start, but because we hadn't spoken to users in digital delivery teams across government, it gave quite a biased view. We needed to speak to them.

I worked with our lead user researcher to write a proposal to fund a Discovery team to help us learn more about our users. Improving GDS support for government was one of the organisation's strategic objectives, so it felt like a good time to pitch. In the meantime, I wanted to learn more about the specific types of GDS support.

I interviewed people at GDS who had been placed in other government organisations during the Transformation Programme from 2013 to 2015. I also interviewed people in government-facing roles at GDS, including content designers on GOV.UK, various technology advisors, and engagement staff.

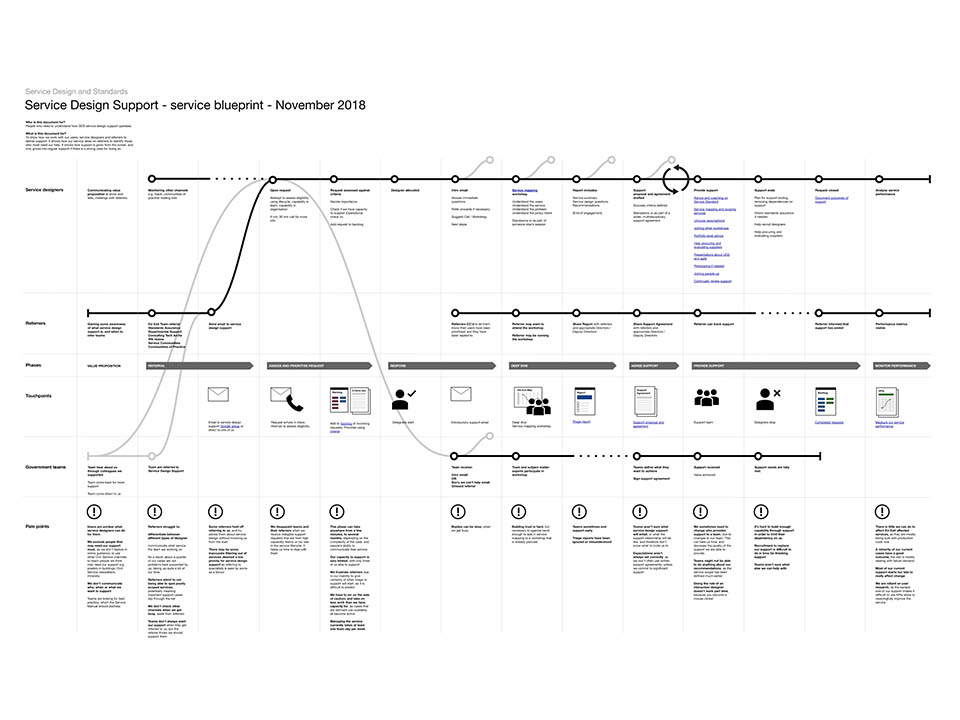

This revealed that the biggest problems with GDS support arose when establishing and setting up support, and this was common across all the support teams. The interview findings pointed towards the need for organisational structures that enable a good support service. In response, I wanted to test multi-disciplinary triage for incoming support requests.

The hypothesis:

Because we know that GDS often misunderstand what government teams need from our support, if we triage incoming support requests with a multi-disciplinary team, we will see a more accurate understanding of their problems and establish better support for them.

Triaging a case properly should lead to the right support being offered. An incoming support request from the Department for Environment, Food and Rural Affairs (Defra) gave me the chance to test this hypothesis.

Testing possible solutions

We didn't yet have a full Discovery team, but Ben Tate, lead service designer, joined the team in January 2018 to help test different approaches to support for services affected by EU Exit. Demands from central government meant that GDS were expected to pause work on lower-priority products and place people into departments. This forced us to manage the risk of proceeding with assumptions about what users actually experienced when getting support, having only interviewed people providing support at GDS.

Despite not having answered all our research questions with as much confidence as we would have liked, the findings I had gathered so far were still useful for framing things to test. These were tests to help us learn how GDS could best approach supporting teams whose services were affected by EU Exit.

I tested triage with Defra, while Ben tested a more multi-disciplinary triage with the Medicines and Healthcare products Regulatory Agency. Through testing, we refined the questions we needed answered before support could start.

When triage worked well, it helped us filter out teams that wouldn't benefit much from GDS support, routing them to more appropriate support, so we could help improve the services of greatest importance to users and to government.

Triage became a key part of our support service, as it allowed us to say no and focus our attention on the teams that would most benefit from our support. It also gave us the justification to reserve some of our time for work that helped us improve support, like user research and improving relationships with referrers.

Although multi-disciplinary triage and support worked best, creating the organisational change needed to sustain it on an ongoing basis was too slow. Given the time pressures of EU Exit, we decided to focus on designing good service design support, which was much more within our influence.

Running an Alpha

We had a hypothesis that good service design support would encompass a triage function for incoming cases, which could in turn refer people to:

- an existing service community

- help to start a service community

- help to map content to their service and create a step by step navigation on GOV.UK

- individual service designers that could provide more specialised support

To help us design this service, in June 2018, the team merged with service communities, gaining a user researcher, more designers, and a delivery manager. We wanted to continue testing different types of support, to help rule things in or out before growing or ideally scaling. This meant running an Alpha.

Outcomes of our Alpha

Our Alpha helped us join up with other support offers at GDS, so we could provide specialist third-line support for cases referred to us by more generalist teams or front-line staff. Working in the open helped me influence other teams to collaborate. This reduced the number of cases being dropped or lost between teams, and made users' experience of support much better.

To help users get support more quickly, I designed a prototype step by step navigation for "Improve a government service", which I wanted to test with users across government. It orders GDS products and services, situating them within the stages of service improvement.

During Alpha, we were able to prototype and test various criteria, tools and processes to help our team manage service design support. We ended up with a slick operation where managing support takes the team only half a day per week. The team spend the rest of each week as follows: three days providing support, one day improving support, and half a day on admin and personal learning and development.

We found that good service design support:

- Advises and facilitates

- Is targeted between Live and Discovery

- Is proportionate to the priority of the service

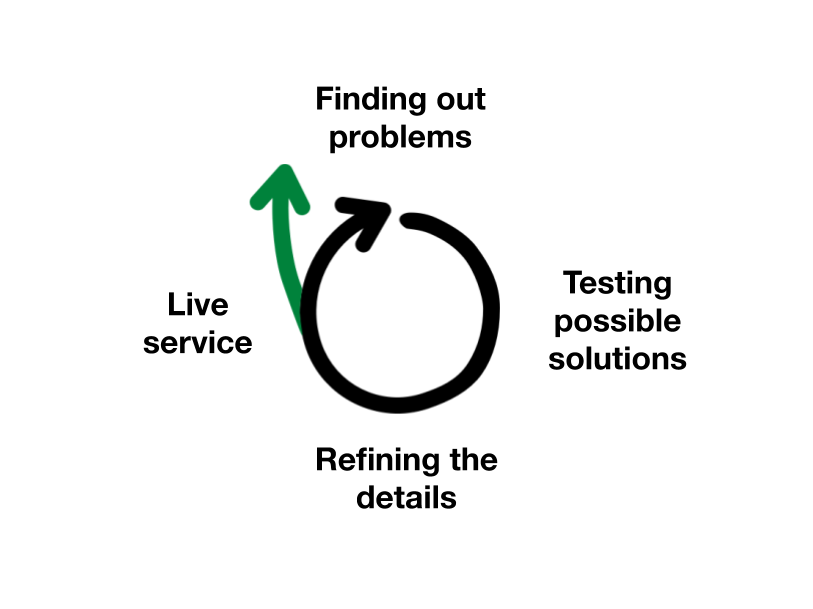

Our Alpha found that our support is best when we work with teams planning change to services, but before delivery teams are in place.

Diagram by Sanjay Poyzer.

After Alpha, we made changes to our triage process, filtering out even more teams. We no longer support teams during their Alphas and Private Betas, because we learnt that these teams were far less able to act on our recommendations, as they were already too committed to a particular solution or approach. We saw this through support cases, as well as in interviews with various referrers at GDS and across government.

Discoveries after Alpha

Our Alpha helped us understand the limitations of scaling support. The biggest limitation of the team is that each service designer can only support two teams, and triage two teams in any given week. We would have to grow the team substantially to have a sizeable impact, given there are thousands of government services. We've since been focusing our non-support work on learning what would enable the same outcomes at scale.

We have also run a short Discovery to learn about the effectiveness of user-centred design during policy making. We knew from our Alpha that the biggest opportunities for service designers in central government to support teams were between Live and Discovery.

However, a vast range of activity happens between Live and Discovery. Policies, strategies, and business cases are being written. Projects are being planned. People are procuring suppliers. A mix of user research findings from Alpha and some gut feeling told us that some of the biggest opportunities within this timespan lay in the policy making process.

We interviewed policy makers, some of whom collaborated with user-centred design colleagues, and others who had never worked in this way. We also interviewed the people adapting user-centred design methods to the policy making process.

We learnt that policy teams collaborating with people with user-centred design skills were probably reducing the risk of unintended consequences from their policies. There is some really good work happening. However, there was no clear need for GDS to launch a policy lab of its own.

We interpreted our user research findings as pointing more towards enabling effective service improvement through long-term collaboration between policy, digital delivery and operations teams. If our hypothesis is correct, it could position our team as an initiator of collaboration, before a more formal service community is established.

Running service design support

Support has been much easier to manage since our Alpha. Even so, there is still plenty of room for improvement. I capped the amount of support we provide to ensure we have time to continuously improve. I'm happy to say that our team takes pride in learning at pace, which not only helps us improve our ability to meet user needs, but also makes work interesting and fun.

As the service design support lead, I really listen to what is said in our retrospectives, and ensure we are in a position to change as a team. I feel we are all ambitious about improving support, and can talk openly and honestly when things aren't working.

Leading the team has allowed me to be in a position where I can offer coaching to the other designers when needed. I've been really lucky working with amazing colleagues, so I only need to provide a clear understanding of the problems we're solving, and the opportunities we're aiming for. This way, they can apply their own design skills and experience to support.

I like helping other people develop, and have been able to use service design support as a platform for this. The types of challenge vary from case to case, so we can assign different people to different challenges, depending on what their strengths are, and what they want to learn.

Towards the end of my time on the team, I recognised that a senior service designer would soon get bored, so I asked them how they felt about taking on some of my responsibilities as a product manager. Eventually, I made myself redundant, and was able to move on to a completely different challenge.

Sharing support patterns

Since we ran our Alpha, we have been sharing our approach. I hosted and presented at the GDS Open Show and Tell, where we talked publicly about how we work.

Nearly all the other support teams at GDS have noticed our model for support, and have been curious about how we created it. While many of our findings won't be directly applicable to their teams, there has been interest in capping the amount of support teams do to make time for continuous improvement, user research, and prototyping. This is common for agile teams, but these support teams are usually more akin to operations teams, focusing on output rather than outcomes.

I felt like I'd failed when we stepped away from designing multi-disciplinary triage and support for EU Exit. Going from this to more recent successes made me immensely proud of our team. Starting small, proving the value, and growing outwards worked.
